One of my learning objectives for 2021 was to figure out just what the hell this reinforcement learning thing is and how it works. Having built a couple of chess games in the past I was fascinated by Google's Deepmind initiative and the success it's had in building agents that can compete with the best human players of certain games. AlphaGo was the first foray into this space, but they've since moved even further with the AlphaZero and MuZero initiatives that aim to master broad selection of games without encoding explicit rules. Super interesting stuff. This series of posts aims to document what I've learned while exploring the world of deep reinforcement learning with applications and code for a couple of specific projects and goals. First, a primer to get up to speed on what reinforcement learning actually is.

Reinforcement learning (RL) is a specific application or class of a machine learning problem where, broadly speaking, the goal is to achieve a long-term objective. Using the specific application of AlphaGo, the objective is to beat your opponent. The rules of the game define an environment and a specific set of actions that you can take in pursuit of this objective. The typical RL problem ecosystem can be summarized using the following diagram.

Environment

The environment is a catch-all term for the context of the problem space. In the context of the Go example, it's the game board, definition of the rules and the actions that you can take. In the context of a retro video game (which is where I'll be building some concrete examples) it is the emulator and software, the ROM file and all associated components.

Agent

At each time step the agent may take a certain subset of actions, these actions alter the state of the environment, transitioning it to a new state, and producing a reward.

The reward is a scalar value that is proportional to the pursuit of the long-term objective. Actions that advance the agent closer to achieving that objective produce positive rewards, actions that detract from the objective produce negative rewards (or penalties). Specifically it's an implementation of The Reward Hypothesis , stated simply

"That all of what we mean by goals and purposes can be well thought of as maximization of the expected value of the cumulative sum of a received scalar signal (reward)"

The agent makes a sequence of decisions (or takes a sequence of actions) for which it is rewarded or penalized based on the reward function. It is the optimization of this reward function over many series of states that is the problem that reinforcement learning is seeking to solve. This article actually explains the key concepts behind reinforcement learning quite well.

In the subsequent posts I'll explore the right hand side of this diagram, by using both classical and neural network approaches to solve policies related to learning how to play retro video games. For now, let's take a look at some of the algorithms we can utilize to solve problems in the reinforcement learning space. There are two broad categories of RL algorithms: model-free and model-based.

Model-based RL algorithms rely on either learning or being provided with a model of the environment, that is, a function that predicts state transitions and associated rewards. These types allow the agent to strategize ahead multiple time steps and are useful when rewards are sparse or only able to be computed after multiple actions have been completed. These types of approaches were used for AlphaGo and AlphaZero where there is already an existing model of the environment for the algorithm to reference. Games with concrete rulesets are a good fit for this type of learning, as are retro games such as Tetris (as we'll see in subsequent posts). These are a particularly poor fit for most retro games as we are unable to provide a concrete model of a given environment; indeed, a ground-truth model is rarely available for most real-world RL problems.

Model-free RL algorithms in contrast are more robust and easier to implement as they allow customization of reward functions and finer grained tuning of parameters. These algorithms rely on trial-and-error experimentation, collection of experiences and outcomes and a well-defined reward function to approximate the optimal policy. A drawback to these approaches are that they're typically sample-inefficient, requiring a lot of experiences in order to recreate anything close to human performance. I'll largely be focusing on the model-free RL algorithms in subsequent posts. Here's a brief description of the one I focused on for my case studies.

Proximal Policy Optimization (PPO)

The academic paper that outlines this algorithm is actually a great and (relatively) easy read, so I'd encourage you to take a look once you've familiarized yourself with basic reinforcement learning concepts. I'll try to summarize here as concisely as possible.

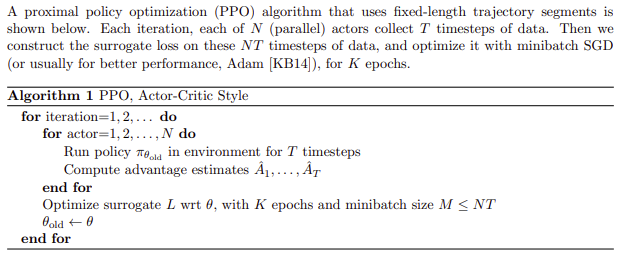

The following algorithm summary, taken directly from the paper is the most concise version.

The algorithm operates by creating N agents that work in parallel. These agents collect T timesteps of data (where T is significantly shorter than the average episode length), by running the existing policy, θ. This data consists of observations of the game state, actions taken and rewards generated. The policy θ is updated at the end of every epoch by optimizing the loss function using stochastic gradient descent or Adam optimizer. The key piece of the algorithm is the introduction of two terms that control for the size of the policy update at each timestep; the clipped surrogate objective and the adaptive Kullback–Leibler divergence. One or both of these can be used. In experimental setting the clipped surrogate objective performed better for their use cases.

The next post in this series will walk through how we can get an environment set up in Python for experimenting with reinforcement learning in the context of retro video games.

Comments